2026 Tech Provocations

10 Really Uncomfortable Questions Leaders and Builders Must Answer This Coming Year

Executive Summary: The Great Recalibration

If 2025 was full of AI claims and bold announcements, 2026 marks AI’s transition from portfolio experiment to balance-sheet and execution reality.

The AI pilot-to-scale P&L gap. McKinsey reports that only 6% of organizations achieve meaningful EBIT impact from AI, while 88% report using AI in at least one function. AI usage is growing faster than costs are falling, forcing organizations to confront unit economics that don’t work at scale. Inference costs, energy bills, and infrastructure capex are colliding with profitability pressure. The question is not “Are we doing AI?” but “Can our business model survive AI at production scale?”

Enterprise-scale transformation drives real results. Not AI. AI high performers invest in transformation and workflow redesign, not just bigger models. They apply systems thinking: identifying the true constraint in end-to-end flows and improving throughput at that bottleneck, rather than locally optimizing isolated steps that don’t move enterprise economics. Digital provenance—verifying the origin and integrity of AI outputs—is now core to competitive position. As sovereignty rules tighten, executives face a new KPI: can you prove your model’s training data, lineage, and compliance posture on demand? Trust and provenance, not scale, now define competitive advantage.

AI sovereignty shifts from rhetoric to strategic imperative. The EU AI Act’s prohibitions and general-purpose AI obligations took effect in 2025, with high-risk requirements delayed until 2027 following industry pressure. While the US and China drive toward full autonomy and strategic influence, others pursue regulatory-led frameworks (EU), strategic partnerships (UK, UAE Stargate initiative), or cognitive and linguistic autonomy (UAE, India, Singapore). Multinationals now navigate a fragmenting technological and regulatory landscape.

Agentic AI expands attack surfaces while eroding accountability. Systems that autonomously orchestrate workflows introduce structural vulnerabilities—prompt injection, model poisoning, insecure outputs—as default conditions. Decision speed outpaces human oversight. Regulatory frameworks demand explainability, but technical complexity makes true human-in-the-loop oversight a mere fiction in real-time environments. Organizations inherit exponential security risk without corresponding governance capabilities.

Resource scarcity dictates AI infrastructure strategy. Data-center electricity demand will more than double by 2030, driven by AI workloads. Electricity costs in areas near data centers have increased up to 267% compared to five years ago. Hyperscalers are purchasing nuclear power plant capacity and investing in small modular reactors, while data center operators repurpose commercial aircraft jet engines into gas turbines for interim power generation—a direct response to five-to-ten-year grid interconnection delays. Competition for energy resources and critical minerals is intensifying geopolitical friction.

In short:

Compute is scarce, energy political, and sovereignty strategic.

Security and trust, not scale, are the new competitive frontiers.

Human capital is fragmenting into ecosystems, not hierarchies.

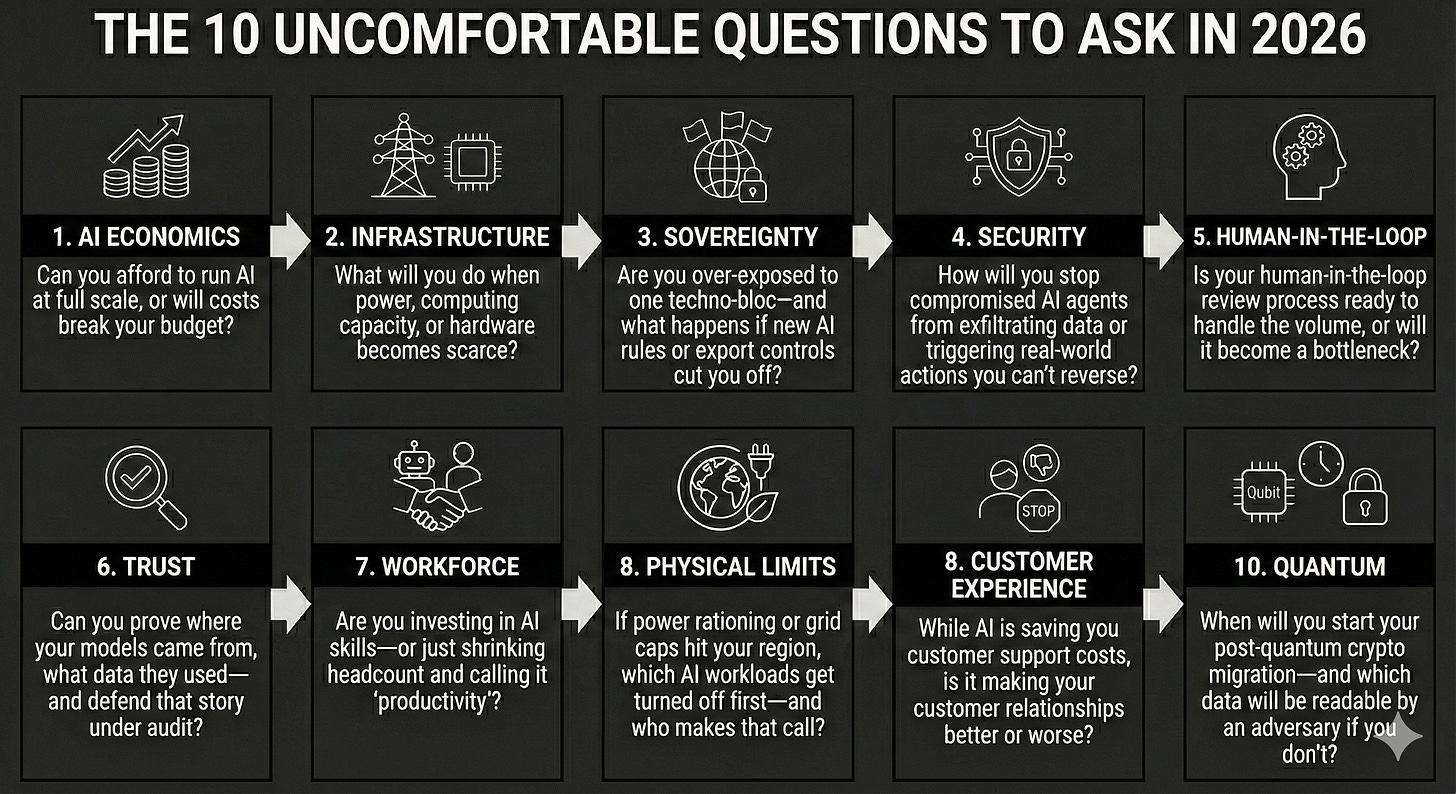

The 10 Uncomfortable Questions To Ask In 2026:

AI Economics: Can you afford to run AI at full scale, or will costs break your budget?

Infrastructure: What will you do when power, computing capacity, or hardware becomes scarce?

Sovereignty: Are you over‑exposed to one techno-bloc—and what happens if new AI rules or export controls cut you off?

Security: How will you stop compromised AI agents from exfiltrating data or triggering real‑world actions you can’t reverse?

Human-in-the-Loop: Is your human-in-the-loop review process ready to handle the volume, or will it become a bottleneck?

Trust/Provenance: Can you prove where your models came from, what data they used—and defend that story under audit?

Workforce: Are you investing in AI skills—or just shrinking headcount and calling it ‘productivity’?

Physical Limits: If power rationing or grid caps hit your region, which AI workloads get turned off first—and who makes that call?

Customer Experience: While AI is saving you customer support costs, is it making your customer relationships better or worse?

Quantum: When will you start your post‑quantum crypto migration—and which data will be readable by an adversary if you don’t?

The 2026 Provocations: Ten Questions for the C-Suite

The sequence moves from foundational viability (economics, infrastructure, sovereignty, security) to operational reality (human oversight, provenance, workforce, physical limits) to market impact (customer experience) and future resilience (quantum).

1. AI Economics: Can you afford to run AI at full scale, or will costs break your budget?

McKinsey’s 2025 State of AI found that only 6% of organizations are “AI high performers” achieving 5%+ EBIT impact, while 88% report use in at least one function—revealing a massive pilot-to-scale gap. Deloitte’s 2026 Tech Trends reports that AI usage is growing faster than costs are falling, with organizations hitting a tipping point where cloud services become cost-prohibitive for high-volume workloads.

The question isn’t whether you are running enough AI initiatives; it’s whether your unit economics can sustain them when inference costs, energy bills, and infrastructure capex collide with profitability pressure. Organizations that accelerate one workflow step with AI while creating downstream bottlenecks may worsen economics rather than improve them—optimizing locally without addressing the system-level constraint.

Next step: Run a unit-economics model for your top three AI use cases at 10x current volume. Calculate the all-in cost per transaction (inference + infrastructure + energy + labor) and compare it to the marginal revenue or cost savings. If the math doesn’t work at scale, identify which constraint must change—pricing, architecture, or scope.
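As a starting point, the unit-economics check above can be sketched in a few lines of Python. This is a minimal illustration, not a finance model: the `UseCase` fields, the `infra_exponent` scaling parameter, and every number in the example are assumptions made up for demonstration.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    monthly_volume: int      # transactions per month today
    inference_cost: float    # per transaction
    energy_cost: float       # per transaction
    labor_cost: float        # per transaction (review, support)
    infra_monthly: float     # infrastructure spend at today's volume
    value_per_txn: float     # marginal revenue or cost saving per transaction


def cost_per_txn(uc: UseCase, scale: float = 1.0, infra_exponent: float = 1.0) -> float:
    """All-in cost per transaction at `scale` times today's volume.

    infra_exponent is an assumption: 1.0 means infrastructure spend grows
    in line with volume; a value below 1.0 models economies of scale.
    """
    volume = uc.monthly_volume * scale
    infra = uc.infra_monthly * scale ** infra_exponent
    return uc.inference_cost + uc.energy_cost + uc.labor_cost + infra / volume


def margin_per_txn(uc: UseCase, scale: float = 1.0, infra_exponent: float = 1.0) -> float:
    """Value created minus all-in cost, per transaction."""
    return uc.value_per_txn - cost_per_txn(uc, scale, infra_exponent)


# Illustrative numbers only.
example = UseCase("claims-triage", 100_000, 0.04, 0.01, 0.10, 20_000, 0.30)
for scale in (1, 10):
    print(example.name, scale, round(margin_per_txn(example, scale), 4))
```

If the margin is negative at 10x under your own inputs, the sketch at least tells you which term dominates, and therefore whether pricing, architecture, or scope is the constraint to change.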

2. Infrastructure: What will you do when power, computing capacity, or hardware becomes scarce?

The IEA projects global data-center electricity demand will more than double by 2030, driven primarily by AI workloads, with power, water, and grid constraints forcing governments to ration access. Deloitte observes that leading organizations are implementing three-tier hybrid architectures (cloud/on-premises/edge) and building purpose-built AI data centers because existing infrastructure cannot be retrofitted fast enough. McKinsey’s analysis shows that enterprises with high-performing IT organizations achieve 35% higher revenue growth—but only if technology strategy and execution are strong, which now depends on physical infrastructure, not just software.

Next step: Audit your infrastructure dependencies right away. Map every AI workload to its compute source (cloud region, on-prem, edge) and energy supply. Identify single points of failure—GPU allocation caps, power contracts, cooling capacity. Build a contingency plan: What gets prioritized if capacity is rationed? Which workloads can move to lower-cost inference? Where can you secure long-term compute or power commitments?

3. Sovereignty: Are you over‑exposed to one techno-bloc—and what happens if new AI rules or export controls cut you off?

Gartner predicts that by 2027, 35% of countries will be locked into region-specific AI platforms, ending the era of borderless AI. The EU AI Act’s general-purpose AI (GPAI) obligations began in August 2025, with high-risk system requirements phasing through 2026–2027, forcing immediate decisions on model choice, data residency, and compliance architecture. Global enterprises now need modular, sovereignty-aware infrastructure to run AI across the U.S., EU, and Asia without rebuilding.

In APAC, sovereignty constraints compound adoption friction: lack of in-country model endpoints, concerns about IP leakage in cross-border flows, and audit requirements that assume cloud infrastructure equals U.S. jurisdiction by default. Organizations should distinguish between genuine sovereignty constraints and capability gaps. If you cannot operationalize AI even in low-risk internal domains where data residency is manageable, your real constraint is governance and industrialization capacity, not infrastructure location.

Next step: Build a sovereignty dependency map for every AI system in production or pilot. Document: model provider, training data jurisdiction, inference location, data residency requirements, and regulatory obligations by geography. Identify which systems would break if a single vendor or jurisdiction became unavailable. Develop a modular architecture roadmap that allows you to swap models or move workloads across regions without rebuilding your entire stack.
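One way to make the dependency map queryable is to hold it as structured records and ask "what breaks if this provider or jurisdiction disappears?" The sketch below is illustrative: the `AISystem` fields, the vendor names, and the jurisdiction codes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AISystem:
    name: str
    model_provider: str
    training_data_jurisdiction: str
    inference_jurisdiction: str
    fallback_providers: tuple = ()
    fallback_jurisdictions: tuple = ()


def systems_at_risk(systems, provider=None, jurisdiction=None):
    """Names of systems with no usable fallback if a provider or jurisdiction is lost."""
    at_risk = []
    for s in systems:
        provider_lost = (
            provider is not None
            and s.model_provider == provider
            and not any(p != provider for p in s.fallback_providers)
        )
        jurisdiction_lost = (
            jurisdiction is not None
            and s.inference_jurisdiction == jurisdiction
            and not any(j != jurisdiction for j in s.fallback_jurisdictions)
        )
        if provider_lost or jurisdiction_lost:
            at_risk.append(s.name)
    return at_risk


# Hypothetical inventory.
inventory = [
    AISystem("contract-review", "vendor-a", "EU", "EU", fallback_providers=("vendor-b",)),
    AISystem("support-bot", "vendor-a", "US", "US"),
    AISystem("forecasting", "vendor-c", "SG", "SG", fallback_jurisdictions=("EU",)),
]
print(systems_at_risk(inventory, provider="vendor-a"))
```

The useful output is the list of systems with empty fallback fields: those are the ones your modular architecture roadmap needs to address first.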

4. Security: How will you stop compromised AI agents from exfiltrating data or triggering real‑world actions you can’t reverse?

Gartner’s 2026 trends highlight agentic AI and multi-agent systems as core to enterprise workflows, but Deloitte warns that AI-driven cyberattacks are forcing organizations to embed security at every step and “defend just as aggressively as they innovate”. The OWASP Top-10 for LLM Applications identifies prompt injection, insecure output handling, and model supply-chain vulnerabilities as default risks, not edge cases—and agentic systems multiply both the attack surface and blast radius.

Next step: Conduct an AI security audit using the OWASP Top-10 for LLM Applications as your checklist. For every agentic system: map what data it can access, which APIs it can invoke, and what workflows it can trigger autonomously. Implement guardrails: input validation, output sanitization, least-privilege access, and audit logging. Establish clear accountability: who is responsible when an agent makes a decision that causes harm or violates policy?
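To make least-privilege access and audit logging concrete, here is a minimal sketch of an authorization gate that sits between an agent and the tools it can call. The agent names, tool names, and the rule that irreversible tools need a named human approver are assumptions for illustration, not a reference to any specific agent framework.

```python
import json
import time

# Hypothetical policy: which tools each agent may invoke.
ALLOWED_TOOLS = {
    "support-agent": {"search_kb", "draft_reply"},
    "finance-agent": {"read_invoice", "issue_refund"},
}
# Hypothetical list of actions that cannot be reversed once triggered.
NEEDS_HUMAN_APPROVAL = {"issue_refund"}

audit_log = []  # in practice this would be an append-only store


def authorize(agent, tool, approved_by=None):
    """Return 'allow', 'deny', or 'hold_for_approval', and record the decision."""
    if tool not in ALLOWED_TOOLS.get(agent, set()):
        decision = "deny"
    elif tool in NEEDS_HUMAN_APPROVAL and approved_by is None:
        decision = "hold_for_approval"
    else:
        decision = "allow"
    audit_log.append(json.dumps({
        "ts": time.time(),
        "agent": agent,
        "tool": tool,
        "approved_by": approved_by,
        "decision": decision,
    }))
    return decision


print(authorize("support-agent", "issue_refund"))
print(authorize("finance-agent", "issue_refund"))
print(authorize("finance-agent", "issue_refund", approved_by="j.doe"))
```

The design choice worth noting is deny-by-default: an agent or tool missing from the policy is refused, and every decision, including refusals, leaves a log entry that answers the accountability question.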

5. Human-in-the-Loop: Is your human-in-the-loop review process ready to handle the volume, or will it become a bottleneck?

When agentic AI accelerates upstream workflows, human review often becomes the new throughput constraint. Organizations that treat HITL as an afterthought—rather than a deliberately designed control system with risk-tiering, sampling logic, queue management, and staffing models—discover that automation created a worse bottleneck than it solved.

Theory of Constraints teaches that improving one step without addressing the system bottleneck simply moves the constraint. Organizations need to design HITL deliberately: use risk-tiering (low-risk automated, medium-risk sampled review, high-risk mandatory review), design for queue health (SLA, backlog, staffing), and make exceptions explicit (what constitutes “stop the line”). Regulatory frameworks like the EU AI Act demand explainability, but technical complexity makes true human oversight difficult in real-time environments without deliberate system design.

Next step: Map your end-to-end AI workflow and identify where human review sits. Measure current queue depth, review SLA, and approval throughput. Design a risk-tiering framework: What decisions can be fully automated (low risk)? Which require sampling (medium risk)? Which require mandatory review (high risk)? Define escalation rules and “stop the line” criteria. Staff accordingly—or redesign the system so the constraint doesn’t kill your throughput.
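The risk-tiering and staffing questions above lend themselves to a small model. The following is a sketch under stated assumptions: the risk thresholds (0.3 and 0.7), the 10% sampling rate, and the 80% reviewer utilization target are placeholder values, not recommendations.

```python
import math
import random


def route(risk_score, rng=random, low=0.3, high=0.7, sample_rate=0.1):
    """Route a decision by risk tier.

    High risk: always reviewed. Medium risk: reviewed at `sample_rate`.
    Low risk: fully automated.
    """
    if risk_score >= high:
        return "mandatory_review"
    if risk_score >= low and rng.random() < sample_rate:
        return "sampled_review"
    return "auto_approve"


def reviewers_needed(items_per_hour, minutes_per_item, utilization=0.8):
    """Reviewers required to keep the queue from growing.

    `utilization` is the share of each reviewer's hour spent reviewing;
    running people at 100% leaves no slack to absorb spikes.
    """
    review_hours_per_hour = items_per_hour * minutes_per_item / 60
    return math.ceil(review_hours_per_hour / utilization)


# Illustrative: 120 items per hour reach human review, 3 minutes each.
print(reviewers_needed(120, 3))
```

Running the staffing function against your measured queue inflow shows quickly whether human review can keep pace, or whether the thresholds and sampling rate need to change before the queue becomes the constraint.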

6. Trust/Provenance: Can you prove where your models came from, what data they used—and defend that story under audit?

McKinsey reports that AI high performers invest heavily in transformation best practices and workflow redesign—not just bigger models. Gartner’s 2026 trends emphasize “digital provenance” (verifying origin and integrity of AI-generated content) and governance as core to maintaining public and stakeholder trust, especially as AI sovereignty rules require proof of model lineage and policy conformance. As the EU AI Act GPAI obligations take effect, executives face a new requirement: demonstrate your model’s provenance, fine-tuning history, and compliance posture on demand.

Next step: Create a provenance registry for every AI model in use. Document: base model source, training data lineage, fine-tuning history, version control, and compliance attestations. Implement logging for all AI-generated outputs so you can trace decisions back to model version, input data, and timestamp. When a regulator, customer, or auditor asks “How do you know this is trustworthy?” you answer with data, not assurances.
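A provenance registry does not need to start as a platform purchase. The sketch below shows the minimum shape: one record per model version, plus an output log entry that ties each AI-generated result to a registered model, hashed inputs and outputs, and a timestamp. All field names and example values are assumptions chosen for illustration.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModelRecord:
    model_id: str
    version: str
    base_model_source: str
    training_data_lineage: tuple
    fine_tuning_history: tuple
    compliance_attestations: tuple


registry = {}  # keyed by (model_id, version)


def register(record):
    registry[(record.model_id, record.version)] = record


def log_output(model_id, version, input_text, output_text):
    """Build a traceable log entry; refuse models that are not registered."""
    if (model_id, version) not in registry:
        raise KeyError(f"unregistered model: {model_id} {version}")
    return {
        "model_id": model_id,
        "version": version,
        "input_sha256": hashlib.sha256(input_text.encode("utf-8")).hexdigest(),
        "output_sha256": hashlib.sha256(output_text.encode("utf-8")).hexdigest(),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical record.
register(ModelRecord(
    "credit-summarizer", "1.2.0", "open-weights base model (vendor X)",
    ("internal loan files 2019-2023",), ("2025-03 domain fine-tune",),
    ("internal model risk review",),
))
print(log_output("credit-summarizer", "1.2.0", "applicant file", "summary text"))
```

Hashing rather than storing raw inputs is one possible design choice: it lets you prove later which input produced which output without keeping sensitive text in the log itself.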

7. Workforce: Are you investing in AI skills—or just shrinking headcount and calling it ‘productivity’?

Gartner predicts that by 2027, 80% of the engineering workforce will need to upskill, yet McKinsey’s 2025 data shows only 28% of organizations are investing in formal reskilling programs. The World Economic Forum estimates 60% of workers require training before 2030, but traditional Learning & Development programs are too slow for the velocity of change in AI-exposed roles.

As AI reduces the labor intensity of delivery work previously outsourced to system integrators, organizations face a choice: build internal capacity to absorb that work, or maintain outsourcing models while claiming strategic control. The workforce transition requires deliberate investment in capability development, not just cost reduction.

Next step: Identify roles most exposed to AI disruption in the next 12 months. For each role, define the new capability profile: What skills remain critical? What new skills are required to manage, validate, or collaborate with AI systems? Launch targeted upskilling programs—not generic “AI literacy” but role-specific, hands-on training. Measure capability development as rigorously as you measure headcount reduction.

8. Physical Limits: If power rationing or grid caps hit your region, which AI workloads get turned off first—and who makes that call?

The IEA projects that data-center electricity demand will more than double by 2030, and regions are beginning to prioritize power allocation. Deloitte and Gartner note that “siting” is now a strategic differentiator; if you cannot secure power, you cannot deploy compute. This is no longer a cost issue; it is binary availability.

Next step: Assess your energy exposure now. Where are your critical AI workloads hosted? What are the power constraints in those regions? If your cloud provider or data center faces energy rationing, which workloads would be capped first? Develop a load-balancing strategy: Can you shift workloads to regions with surplus capacity? Can you optimize inference efficiency to reduce compute demand? Secure long-term power commitments for mission-critical systems before capacity becomes scarce.
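The "which workloads get turned off first" decision is easier to defend if it is written down as a rule before the cap arrives. Here is a minimal sketch of a priority-based shedding plan; the workload names, priority ranks, and power figures are invented for illustration, and the greedy rule is one simple policy among several you could choose.

```python
def shed_plan(workloads, cap_kw):
    """Decide what keeps running under a power cap.

    workloads: list of (name, priority, power_kw); a lower priority number
    means more critical. Workloads are admitted in priority order while
    they fit under the cap; anything that does not fit is shed.
    """
    keep, shed = [], []
    remaining = cap_kw
    for name, _priority, power_kw in sorted(workloads, key=lambda w: w[1]):
        if power_kw <= remaining:
            keep.append(name)
            remaining -= power_kw
        else:
            shed.append(name)
    return keep, shed


# Hypothetical workloads and a 100 kW cap.
workloads = [
    ("fraud-scoring", 1, 40),
    ("support-chatbot", 2, 30),
    ("batch-training", 3, 100),
    ("internal-search", 3, 20),
]
print(shed_plan(workloads, cap_kw=100))
```

The priority column is the part that matters: agreeing on it in advance is how you answer "who makes that call" before the call has to be made under pressure.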

9. Customer Experience: While AI is saving you customer support costs, is it making your customer relationships better or worse?

Forrester predicts that in 2026, one-third of brands will erode customer trust by deploying premature AI self-service agents. Consumers are responding to low-quality digital interactions: 52% of U.S. online adults now actively seek offline, tactile experiences to escape digital friction.

Next step: Measure AI’s impact on customer lifetime value, not just cost-to-serve. Track: customer satisfaction scores, escalation rates, resolution time, and repeat purchase behavior for AI-assisted vs. human-assisted interactions. If your chatbot is driving customers away, you’re optimizing the wrong metric. Run A/B tests: Does AI enhance the experience, or does it create friction? Be willing to pull back AI in high-value touchpoints if it erodes trust and loyalty.
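For the A/B comparison, a standard two-proportion z-test is one simple way to check whether a difference in, say, repeat purchase rate between AI-assisted and human-assisted customers is larger than noise. The sketch below uses only the Python standard library; the counts in the example are made up, and the test assumes reasonably large, randomly assigned groups.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value). Raises ZeroDivisionError if the pooled rate is
    exactly 0 or 1, since the standard error is then zero.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the normal
    return z, p_value


# Illustrative counts: repeat purchasers out of customers served.
z, p = two_proportion_z_test(success_a=200, n_a=1000,   # AI-assisted
                             success_b=300, n_b=1000)   # human-assisted
print(z, p)
```

A negative z with a small p-value here would suggest the AI-assisted group repurchases less often, which is exactly the signal that cost-to-serve metrics hide.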

10. Quantum: When will you start your post‑quantum crypto migration—and which data will be readable by an adversary if you don’t?

The EU has set a 2026 deadline for organizations to begin transitioning to Post-Quantum Cryptography (PQC), with full critical infrastructure completion due by 2030. NIST finalized its first PQC standards in August 2024, triggering immediate compliance requirements for global enterprises. “Harvest-now-decrypt-later” attacks mean any data you encrypt today with standard RSA/ECC is vulnerable if it has a shelf life exceeding five years.

Next step: Inventory every system using RSA or ECC encryption. Prioritize data with long shelf lives—financial records, intellectual property, health data, contracts—anything that must remain confidential beyond 2030. Begin pilot migrations to NIST-approved PQC algorithms. Establish a migration roadmap with milestones: Which systems transition in 2026? Which by 2028? Which require vendor upgrades? Don’t wait—adversaries are harvesting encrypted data today for future decryption.
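A common way to prioritize the inventory is Mosca's inequality: data is exposed if its required shelf life plus your migration time exceeds the time until a cryptographically relevant quantum computer exists. The sketch below applies that rule to a hypothetical inventory. The system names and durations are invented, and `years_to_quantum_threat` is an assumption you must set yourself, since nobody knows the real value.

```python
from dataclasses import dataclass


@dataclass
class CryptoAsset:
    system: str
    algorithm: str           # e.g. "RSA-2048", "ECDSA-P256", "AES-256"
    shelf_life_years: float  # how long the data must stay confidential
    migration_years: float   # estimated time to migrate this system


# Public-key families covered by this sketch (symmetric ciphers are out of scope).
QUANTUM_VULNERABLE_PREFIXES = ("RSA", "ECDSA", "ECDH", "ECC", "DH")


def is_exposed(asset, years_to_quantum_threat):
    """Mosca's inequality: shelf life + migration time > time to threat."""
    vulnerable = asset.algorithm.upper().startswith(QUANTUM_VULNERABLE_PREFIXES)
    return vulnerable and (
        asset.shelf_life_years + asset.migration_years > years_to_quantum_threat
    )


def migration_queue(assets, years_to_quantum_threat):
    """Exposed assets, most exposed first."""
    exposed = [a for a in assets if is_exposed(a, years_to_quantum_threat)]
    return sorted(
        exposed,
        key=lambda a: a.shelf_life_years + a.migration_years,
        reverse=True,
    )


# Hypothetical inventory and an assumed 8-year horizon.
inventory = [
    CryptoAsset("patient-records", "RSA-2048", 20, 3),
    CryptoAsset("session-tokens", "ECDSA-P256", 0.1, 1),
    CryptoAsset("contract-archive", "RSA-4096", 10, 2),
    CryptoAsset("disk-encryption", "AES-256", 15, 2),
]
for asset in migration_queue(inventory, years_to_quantum_threat=8):
    print(asset.system)
```

Rerunning the queue with a shorter horizon is a quick way to see which systems move onto the list if the threat arrives earlier than you assumed.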

How about you? What’s on your plate for 2026?

Thanks for reading!

Damien

I am a Senior Technology Advisor who works at the intersection of AI, business transformation, and geopolitics through RebootUp (consulting) and KoncentriK (publication): what I call Technopolitics. I help leaders turn emerging tech into cashflows while navigating today’s strategic and systemic risks. Get in touch to learn more: damien.kopp@rebootup.com

These questions force leaders to confront AI’s economic, operational, and ethical realities before scaling.