Winning the Battle for Trust When Truth Is Fragmented

Quantum Narratives and Corporate Chaos: Why Business Leaders Must Rethink Truth, Trust, and Strategy in the Technopolitical Age

This essay explores how AI-powered algorithms and digital platforms now shape the narratives that define reputation, legitimacy, and influence. Are these systems ideologically and politically neutral? Or are they optimized to maximize clicks, revenue, and outrage, to reinforce ideology, and to manipulate public sentiment?

If truth is no longer singular but fractured across perspectives, platforms, and algorithms, how are AI, media systems, and data governance fragmenting authority and stability?

Most importantly, why should corporate leaders care, and what can they do about it?

You’ve built crisis protocols. You’ve hired cybersecurity experts. You’ve aligned your ESG narrative and digital strategy.

But what if none of that matters when a coordinated online campaign mixing truth, distortion, and emotional resonance reframes your company as a villain before you even know you’re under attack?

What if your stakeholders (customers, regulators, employees, investors) each see a different version of your story, and none of them are under your control?

What happens when your narrative infrastructure becomes your weakest link?

What just happened?

On the morning of December 4th, 2024, in Châtillon near Paris, Nicolas Maes and his leadership team received confirmation of a disaster in the making. Maes had been at the helm of Orano, France’s flagship nuclear company, since November 2023.

The Nigerien state had taken operational control of the SOMAÏR uranium mine in Arlit. No new law had been passed, no international ruling issued. Just a quiet replacement of personnel, a cutoff of access, and a break in the line of operational control. The company’s official press release was sober: “decisions previously made by Orano are no longer being respected” [source].

To anyone unfamiliar with Orano’s presence in Niger, the news might have seemed like a bureaucratic hiccup. But in reality, this marked the culmination of a months-long narrative assault. Orano had operated in Niger since 1971, long before its 2017 restructuring from Areva. The SOMAÏR mine was one of the country’s largest industrial employers, and uranium from Niger remained critical to France’s nuclear energy program [source].

Yet this wasn’t a dispute over mining rights. It was something more difficult to confront: a loss of legitimacy, engineered through perception.

The trouble began in the summer of 2023. On July 26th, Niger’s elected president, Mohamed Bazoum, was ousted by General Abdourahamane Tchiani in a military coup [source]. The junta quickly pivoted Niger’s foreign policy. France was cast as a neo-colonial force exploiting Africa’s natural resources. And Orano, with its deep uranium footprint, became the perfect symbol of that narrative.

But the junta didn’t act alone.

Almost immediately, Russian-backed information networks began circulating anti-French, anti-Orano messages. Central to this effort was a loosely affiliated set of pro-Russian influence operations, most notably the SDA (Social Design Agency). This group, amplified by bot networks, Telegram channels, and pan-African influencers, launched a coordinated campaign accusing France of economic imperialism and Orano of “illegal” and “irresponsible” uranium extraction [source].

SDA-aligned messaging framed the junta’s moves as heroic acts of sovereignty. They praised Niger’s resource nationalism while simultaneously promoting Russia as a liberator and economic partner. Nigerien Mining Minister Colonel Ousmane Abarchi gave repeated interviews to Russian outlets like RIA Novosti, accusing Orano of disrespecting Niger’s sovereignty and mismanaging its resources—statements that were replayed and remixed across TikTok, Facebook, and Telegram [source, source].

By April 2024, SDA channels were actively promoting offline demonstrations outside uranium facilities, linking imagery of small, peaceful protests with highly charged narratives about neocolonial oppression. The videos were cut to resonate emotionally: mothers holding signs, miners waving the Nigerien flag, slow-motion pans over mine gates with music invoking pan-African liberation. None of it was illegal. All of it was effective [source].

Inside Orano, the warning signs were visible by October 2024. The company announced that SOMAÏR operations would be suspended due to financial strain, a decision triggered by disrupted logistics and uncertain authorizations [source].

But outside the company, the narrative had already shifted. In the digital sphere, Orano had been tried and convicted. By the time security forces took control of the site in early December, most Nigeriens saw it as overdue justice.

Six months later, on June 19, 2025, General Tchiani made it official: the SOMAÏR mine was nationalized [source]. The assets were transferred to the state. Russia’s state-linked media and affiliated analysts praised the decision as a “turning point for African sovereignty.” And within weeks, Niger opened its doors to Rosatom and other Russian uranium investors [source].

Orano, once confident in its contracts and financials, had filed for international arbitration, but the uranium was gone. The site was operational under new authority. The French state’s diplomatic reaction was muted.

The story had already been written and it wasn’t theirs.

This was not a legal defeat. It was a loss of control over the narrative. Orano’s assets weren’t lost in courtrooms or boardrooms. They were lost in the comment sections, the Telegram feeds, the influencer circles, and the subtle interplay between physical demonstration and digital disinformation. Orano never truly entered that arena. And by the time it realized the rules had changed, its presence in Niger had already been redefined by others.

What happened to Orano wasn’t a one-off. It was narrative warfare, and the same playbook can (and will) be applied to other companies, in other sectors, across other geographies.

In a world where perception is programmable and algorithms are weaponized, narrative (not fact) is becoming the terrain on which geopolitical and corporate power is contested.

Orano didn’t lose control of SOMAÏR because it failed operationally. It lost because someone else told a better story first.

Your Crisis Playbook Is Obsolete

In today’s chaotic media landscape, corporate leaders are not just brand stewards: they’re narrative system architects.

Popularized to describe the rise of populist movements, the concept of quantum politics refers to a world where truth is fragmented, emotions override logic, and every audience sees a different version of reality. It’s no longer confined to politics. It now governs boardrooms, earnings calls, press releases, Slack threads, and TikTok feeds.

The term “quantum politics” has gained traction in recent years (notably through Giuliano da Empoli’s book The Engineers of Chaos, JC Lattès, 2019), but its intellectual roots go back to political scientist Theodore Lewis Becker, who in the early 1990s argued that the Newtonian assumptions of political science (predictability, rationality, linear causality) no longer matched the messiness of real-world politics. In his book Quantum Politics: Applying Quantum Theory to Political Phenomena, Becker proposed a new metaphorical model, one in which uncertainty, contradiction, and emotional dynamics are not anomalies; they are the system.

His view? Just as quantum physics replaced Newtonian mechanics, political and social understanding must evolve beyond mechanical models to account for ambiguity, entanglement, and participatory observation.

Every brand is now entangled in a media ecosystem where:

Truth is not fixed but probabilistic

Stakeholders interpret through emotional filters

Narratives spread faster than facts

This is no longer business-as-usual communication. It’s communication in a quantum system.

From Newton to Quantum: The Communication Shift That Changed Everything

Becker’s critique of Newtonian political thought - rooted in Enlightenment values of objectivity, rationality, and causality - applies equally to corporate communication. Businesses too have long operated as if messaging could be linear, audiences rational, and perception managed through control.

But just as Becker described in his quantum framework, stakeholders now operate in a probabilistic environment, where messages can hold multiple meanings, emotional response trumps logic, and the very act of communication alters the behavior it tries to shape.

In the Newtonian era of corporate comms:

Messages were rational, linear, and fact-driven.

Control was central.

Press releases shaped perception.

Quantum communication recognizes:

Messages are multivalent

Emotion overrides logic

Control is distributed across platforms and code

You cannot fight modern disinformation or narrative attacks with legacy PR tactics. You need to accept that your message will be reinterpreted, atomized, and weaponized.

A few eye-opening facts show the extent of the challenge:

Only 31% of U.S. adults report at least "a fair amount" of confidence in mass media, while 36% express no trust at all (Gallup 2024).

Misinformation and “truth fragmentation” are ranked among the top global risks (WEF Global Risks Report, 2019).

More than half (54%) of people in the US now get news from social media and video platforms like Facebook, X and YouTube, overtaking TV (50%) and news sites and apps (48%) (Reuters Institute)

False tweets are 70% more likely to be reshared than truthful ones (MIT study)

Social media users are nearly twice as likely to share negative headlines (Nature)

Over half of the top 100 TikToks on mental health contain misinformation (Guardian)

In this reality, brand perception is volatile, and your message must function across highly personalized and emotional ecosystems.

The Enlightenment gave us truth as a shared destination. Today, we must learn to navigate truth as shifting terrain, where trust is the compass and narratives shape the landscape. This is the age of Quantum Narratives.

As Shoshana Zuboff explains in The Age of Surveillance Capitalism, today’s media systems don’t just deliver content, they extract behavioral data, shape emotional states, and engineer attention:

“The game has shifted from using data to serve customers to using customers to serve data.”

In this environment, companies no longer fully control their narrative: they are participants in a system that prioritizes virality over truth, engagement over nuance.

Corporate messaging is co-constructed with algorithms, reactions, and micro-targeted emotional triggers.

This isn't communication as we know it anymore: It's quantum entanglement!

The Technopolitical Engine of Narrative Control

This is where technopolitics becomes central: platforms don’t just carry content. They carry ideology. They don’t just mediate information, they structure power. Platforms now act as extensions of state influence, ideological filters, and market shapers all at once.

Drawing on Asma Mhalla’s framing of Total Technology in her book Technopolitique (2024) and subsequent interviews (Philonomist, Le Monde), we understand Big Tech as the new geopolitical actor:

“The relationship between major platforms and states has become organic. Large technology companies now act as forward-operating arms, executing the geopolitical objectives of the states they are tied to. They provide the technical infrastructure, computational power, and surveillance capacity, while the state grants them legitimacy, legal immunity, and regulatory protection.”

— Asma Mhalla, Technopolitique (2024)

As well as:

“This pact between Big Tech and Big State constitutes a form of hybrid sovereignty. Major decisions are no longer made in democratic arenas but within technical architectures, under the guise of neutrality. This is not a drift—it is an explicit political project.”

— Asma Mhalla, Technopolitique (2024)

So, your infrastructure is now political. And the narrative field is a battlefield.

Large Language Models as the New Narrative Machines

LLMs act as probabilistic narrative engines, collapsing multiple possible worldviews into a single answer path, biased by their training corpus and cultural center of gravity, as research shows (see “Further reading”).
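To make the “collapse” metaphor a little more concrete, here is a deliberately simplified Python sketch. It is not how any production LLM works internally: it assumes a toy model whose probability for each competing framing of a question is simply that framing’s share of a hypothetical corpus (the labels `framing_a`, `framing_b`, `framing_c` and their counts are invented). Greedy decoding, which always picks the most probable option, returns the majority framing every single time, while sampling from the same distribution at least lets minority framings surface in proportion to their weight.

```python
import random
from collections import Counter

# Hypothetical corpus: each entry is the framing one document gives to the same question.
# Labels and counts are invented purely for illustration.
CORPUS = ["framing_a"] * 70 + ["framing_b"] * 20 + ["framing_c"] * 10


def answer_distribution(docs):
    """Naive toy model: P(framing) is its relative frequency in the corpus."""
    counts = Counter(docs)
    total = sum(counts.values())
    return {framing: n / total for framing, n in counts.items()}


def greedy_answer(dist):
    """Greedy decoding: always collapse to the single most probable framing."""
    return max(dist, key=dist.get)


def sampled_answers(dist, n, seed=0):
    """Sampling: draw n answers in proportion to their probabilities (seeded for repeatability)."""
    rng = random.Random(seed)
    framings = list(dist)
    weights = [dist[f] for f in framings]
    return Counter(rng.choices(framings, weights=weights, k=n))


if __name__ == "__main__":
    dist = answer_distribution(CORPUS)
    print("distribution:", dist)
    # Asking the greedy "model" repeatedly never surfaces the minority framings.
    print("greedy, asked 5 times:", [greedy_answer(dist) for _ in range(5)])
    # Sampling the same distribution lets the 30% minority appear roughly 30% of the time.
    print("sampled, asked 1000 times:", sampled_answers(dist, 1000))
```

The point of the sketch is the design choice: with an identical underlying distribution, the decoding strategy alone decides whether a minority view ever reaches the user. Real systems layer temperature, top-p sampling and alignment tuning on top of this, so the toy only illustrates the direction of the effect, not its size.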

Cultural bias and alignment

Most models, regardless of their origin, aligned closely with cultural values characteristic of English-speaking and Protestant European countries. Chinese LLMs were expected to reflect Confucian values (e.g. collectivism, authority) but did not; instead, they showed a bias toward individual autonomy, tolerance, and environmentalism, which are typically Western liberal values.

Even LLMs trained on “uncensored” datasets (e.g. Dolphin models) showed cultural convergence toward Western individualist values. This is perhaps unsurprising given that 49.7% of websites are in English, while native English speakers represent only about 4.7% of the world population.

"Rather than adapting to cultural plurality, these LLMs tend to default to a normative Western framework, particularly shaped by U.S. liberal norms."

— Columbia University, Cultural Fidelity in LLMs (2024)

Across 600 parallel translation examples from 10 languages, GPT-4 gave culturally inappropriate or inaccurate translations up to 27.8% of the time. For example, translating culturally specific idioms (e.g. African proverbs) into English often resulted in a loss of meaning or a Westernized reinterpretation.

“GenAI has transformed how language is produced and transmitted: there is [a] high risk that we will over time propagate Western cultural values and reasoning more so than others; and [the] erosion of cultural diversity in digital communication is a threat to diversity of thought”

— Shav Vimalendiran (2024)

So at minimum, the above shows a risk of cognitive uniformisation and a loss of rich historical and cultural nuances.

Encoding Ideology into LLMs

“Truth” in LLMs is shaped by presence in training corpora, not by ground reality: it’s a quantum narrative distortion. This digital asymmetry, meaning how much of a culture is represented online, is one of the strongest drivers of how faithfully an LLM reflects that culture’s values.

Elon Musk has said the upcoming Grok 3.5 model will have “advanced reasoning” and that he wants it to be used “to rewrite the entire corpus of human knowledge, adding missing information and deleting errors.”

But who gets to decide what’s right or wrong? What’s good or bad?

Generative AI becomes a vector of digital assimilation: values are encoded into code, not debated in context.

If so, are LLMs colonial instruments? This is where technopolitics meets cognitive colonization.

Cognitive Colonialism

This is not colonialism in the classical sense. But it is a form of digital standardization: an algorithmic flattening of moral, cultural, and cognitive diversity in favor of a default.

This is why we must ask ourselves:

Are LLMs becoming ideological infrastructures promoting culture, not just computation?

If yes, the implications are not just technical. They are civilizational.

As Asma Mhalla writes in Technopolitique:

“The technologies they [BigTech] bring to market are not ideologically neutral… The way a technological foundation is designed, conceptualized, and governed; the datasets injected, the technical, ethical, or moral filters applied; all encode intrinsic biases and ideological positions.”

Further reading

These papers reveal that LLMs disproportionately reflect Western, liberal, individualist values—regardless of model origin (even Chinese models). This is not accidental. It stems from:

Training data availability (which is skewed toward high-resource, mostly Western languages)

Biases in alignment tuning (mostly done in English, by U.S.-centric teams)

Digital representation asymmetries (some cultures are absent or misrepresented in training data)

This entrenches a digital-cultural hierarchy—a form of algorithmic imperialism.

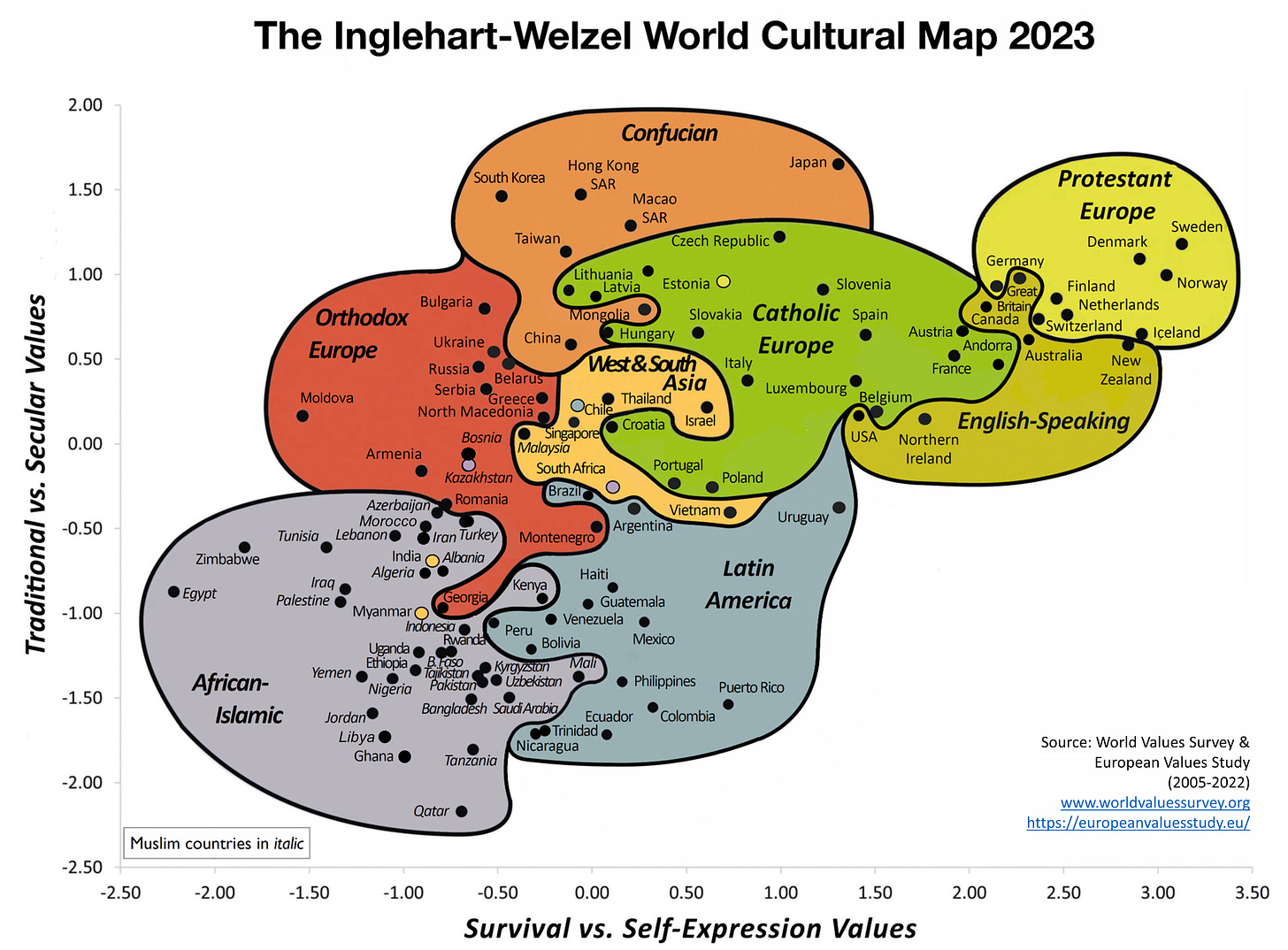

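The Inglehart-Welzel Cultural Map is a useful visual for understanding how cultures and religions relate to one another: it plots societies along two value dimensions, traditional vs. secular-rational and survival vs. self-expression.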

Cultural Bias and Cultural Alignment of Large Language Models (Yan Tao, Olga Viberg, Ryan S. Baker, René F. Kizilcec)

Cultural Fidelity in Large-Language Models: An Evaluation of Online Language Resources as a Driver of Model Performance in Value Representation (Sharif Kazemi, Gloria Gerhardt, Jonty Katz, Caroline Ida Kuria, Estelle Pan, Umang Prabhakar)

How Deep Is Representational Bias in LLMs? The Cases of Caste and Religion (Agrima Seth, Monojit Choudhury, Sunayana Sitaram, Kentaro Toyama, Aditya Vashistha, Kalika Bali)

Narrative Sovereignty is Now a Corporate Risk

One thing the above has shown is that platforms (information conduits) and AI models (filters, amplifiers and now generators of information) are not neutral.

But what does this mean for corporations and business leaders?

I believe that what starts as a data imbalance becomes a corporate liability when scaled across products, services, and communication.

Most companies (and governments!) don’t own their narrative engine. They are being disintermediated by the big platforms and the proprietary AI running on them.

Narrative attacks now affect the entire enterprise value chain, not just perception, but operations, partnerships, licensing, market access, and even capital flow.

Business risks include:

M&A disruption: valuation collapse via narrative manipulation

Regulatory pressure: laws shaped by viral framing

Competitive asymmetry: rivals aligned with state actors controlling the story field

Talent erosion: employees influenced by cultural counter-narratives

If you don’t control your narrative environment, you can lose deals, licenses, markets, and trust, even when you’ve done nothing wrong.

Marketing Drift: Multinational brands using GenAI to localize content at scale risk producing tone-deaf, misaligned, or offensive outputs. Western-coded assumptions about gender, autonomy, humor, or social norms may alienate local audiences, reinforce stereotypes, or trigger public backlash.

AI agents: they just don’t understand the world they are in. AI agents or copilots, designed to automate workflows or engage with customers, often operate with an implicit Anglo-American frame which may be inappropriate or even dangerous in contexts like:

Regulatory compliance (e.g. data sharing norms in MENA or APAC)

Political neutrality (e.g. chatbot responses about Hong Kong or Gaza)

Cultural taboos (e.g. references to religion, gender, sexuality)

LLMs do not just answer: they answer in ways that belong somewhere. And that somewhere is almost always the Global North.

Ethical & Legal Exposure: When autonomous systems make decisions based on encoded assumptions, the absence of local cultural alignment becomes an accountability minefield:

Is the AI reflecting user intent or overriding it?

Who owns the harm when the AI says the “wrong thing” in the “wrong place”?

The question every corporate leader must ask is: how do I regain control over my narrative?

Building Narrative Intelligence as a Strategic Function

I argue here that building narrative sovereignty is about understanding its dependencies and associated risks in order to regain control in strategic areas. And it’s not just the job of Marketing or Corporate Comms to figure out “the messaging”.

Companies need a dedicated capacity to:

Detect disinformation threats

Map narrative terrain across platforms

Build counter-positioning playbooks

Simulate crisis scenarios under information distortion

We need to treat narrative like cyber: an enterprise-wide risk with technical, legal, reputational and strategic dimensions.

In a world governed by predictive models, sovereignty is no longer only about infrastructure, compute, or data residency. It is about narrative alignment.

It is not enough for an AI system to be technically accurate. It must be culturally situated and politically accountable.

It’s a new muscle to be built: narrative sovereignty requires cognitive reflexivity.

Owning or controlling your internal knowledge infrastructure is as strategic as owning financial assets; otherwise, your corporate truth is mediated by someone else’s algorithms.

Operationalizing the Response: R.I.S.Q.™ as a Strategic Framework

To help corporate leaders navigate this new terrain, I have created the R.I.S.Q.™ framework.

Reframe: Stop seeing this as a PR issue. It’s geopolitical and strategic.

Intent: Identify who benefits from distorting your story.

Secure: Protect both your digital infrastructure and your narrative infrastructure.

Question: Stress-test your assumptions: are your values and positions narratively defensible?

In short: This is not about better messaging; it’s about strategic resilience. R.I.S.Q.™ is your compass for navigating contested realities.

For deeper dives into the R.I.S.Q.™ framework and a personalized assessment, please get in touch with me.

Your 30-Day / 90-Day / 12-Month Playbook

My proposal for drawing up a media intelligence roadmap that builds trust architectures:

Narrative observability layers

Disinformation detection as core compliance

Probabilistic trust models (inspired by quantum logic)

Media provenance & AI watermarking standards

In 30 Days:

Conduct a narrative risk audit

Brief leadership on exposure to technopolitical threats

In 90 Days:

Set up cross-functional Narrative Ops taskforce

Run a crisis simulation focused on AI-misfire or disinfo attack

In 12 Months:

Embed narrative observability into all high-risk business lines

Build capability to shape, defend, and recover narrative coherence at speed

Final Call to Action

In his book Waste Land (2025), Robert Kaplan reminds us of Germany’s Weimar Republic as a place of brilliance and collapse, where too many narratives competed, none took hold, and extremism rose in the vacuum. That collapse paved the way for Nazism and World War II. That moment mirrors today’s information sphere: over-fragmentation breeds incoherence, and incoherence creates an opening for manipulation.

“When no narrative holds, the loudest one wins.”

As I wrote in Davos 2025: Leadership in Crisis, we are entering a late-phase cycle - economically, institutionally, and culturally. The old levers of power - debt, control, consensus - no longer function as they did.

In such times, leadership must be anchored in strategic clarity and principled action. Not only because it’s right - but because in a quantum world, coherence is survival.

Your future is being narrated in code, in models, in memes. The question is not whether you’ll be mentioned; it’s whether your version of the story will stick. If you want to lead, you need to reclaim the pen!

In a quantum world, what’s true doesn’t always win. But what’s clear, coherent, and deeply human just might.

Build coherence. Defend pluralism. Rethink strategy.

Because in a world where trust is fragmented, narrative is power.

Thanks for reading!

Damien

I am a Senior Technology Advisor who works at the intersection of AI, business transformation, and geopolitics, which I call Technopolitics, through RebootUp (consulting) and KoncentriK (publication). I help leaders turn emerging tech into business impact while navigating today’s strategic and systemic risks. Get in touch to learn more: damien.kopp@rebootup.com
